For this project, my team programmed a Nomad Scout robot in Java to navigate a maze using sonar sensors. The robot did not know the layout of the maze in advance and had to map it as it went.

My main responsibility during this project was working out the kinematics of the robot motion required for each task, and implementing it in code.

I have no photos, videos or presentations from this project, only code! The class was pure robot programming. However, Jordan Parsons has kindly let me use his video to demonstrate what took place in the class. It was recut to remove music, since the original could not be embedded.

Perception

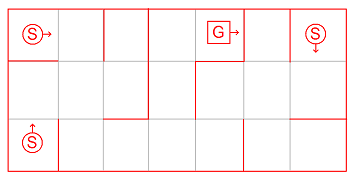

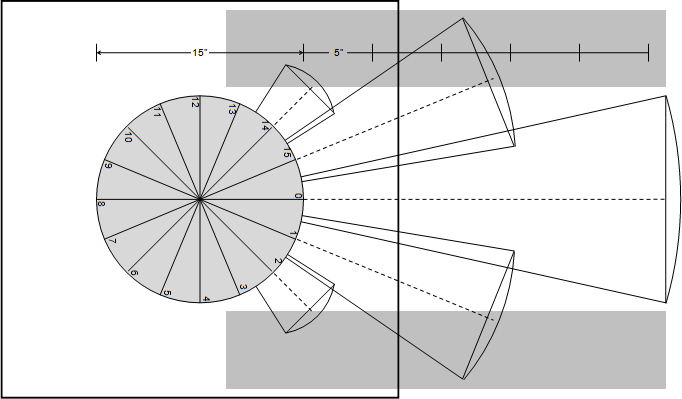

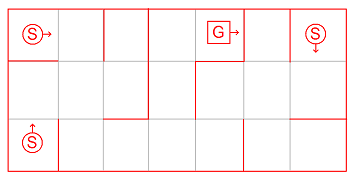

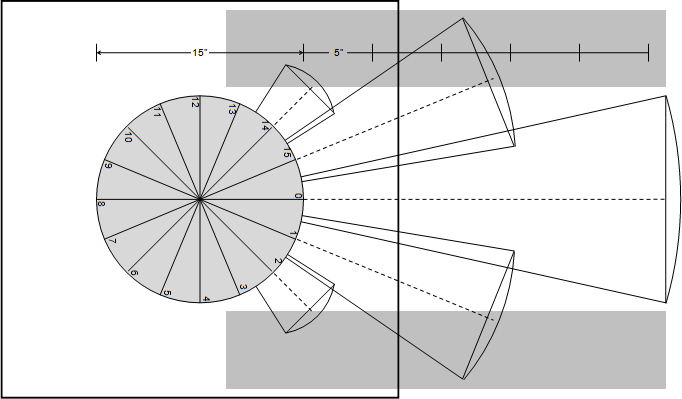

The robots are controlled by a laptop sitting on top, connected via a serial link. They have a bumper to detect collisions and 16 sonar sensors arranged in a ring.

These sensors let the robot detect obstacles at a distance, but getting it to interpret the data was our job. The first order of business was to wander around without running into anything. We used the sonar geometry above to designate a "danger zone" in front of the robot; an obstacle inside it made the robot slow down or turn away. The robot's stopping distance was predicted from its current speed.
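A minimal sketch of such a check is below. It assumes 16 sonars spaced 22.5° apart with sensor 0 facing straight ahead and indices increasing counter-clockwise, ranges already measured from the robot's center, and a constant braking deceleration, so the stopping distance is v²/(2a). The class, method and parameter names are hypothetical, not taken from the project's code.

```java
/**
 * Hypothetical "danger zone" check. Assumes 16 sonars spaced 22.5 degrees apart,
 * sensor 0 facing forward, indices increasing counter-clockwise, and ranges measured
 * from the robot's center (sonar ring radius already added).
 */
final class DangerZone {
    static final int NUM_SONARS = 16;
    static final double SONAR_SPACING = 2 * Math.PI / NUM_SONARS; // 22.5 degrees in radians

    /** Distance needed to stop from the given speed at constant deceleration: v^2 / (2a). */
    static double stoppingDistance(double speed, double deceleration) {
        return speed * speed / (2 * deceleration);
    }

    /**
     * True if any sonar return falls inside a rectangle ahead of the robot that is
     * 2 * halfWidth wide and (stopping distance + margin) long.
     */
    static boolean inDanger(double[] ranges, double speed, double deceleration,
                            double halfWidth, double margin) {
        double lookahead = stoppingDistance(speed, deceleration) + margin;
        for (int i = 0; i < ranges.length; i++) {
            double angle = i * SONAR_SPACING;
            double ahead = ranges[i] * Math.cos(angle);   // distance in front of the robot
            double lateral = ranges[i] * Math.sin(angle); // sideways offset, left positive
            if (ahead > 0 && ahead < lookahead && Math.abs(lateral) < halfWidth) {
                return true;
            }
        }
        return false;
    }
}
```

Because the lookahead grows with the square of the speed, the zone automatically stretches when the robot moves faster; a `true` result would be the cue to slow down or turn away.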

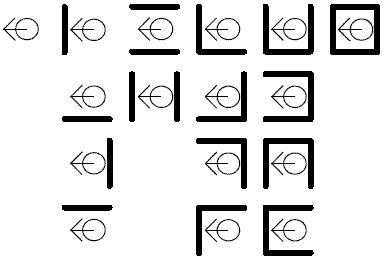

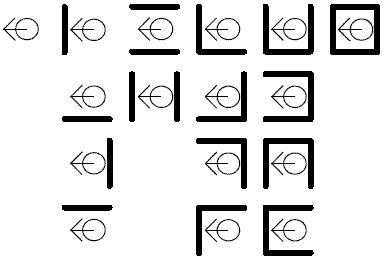

The robot lends itself well to a simplified 2D world of vertical walls arranged in a grid. For the next task, the robot had to identify its surroundings and determine its orientation from a set of possible wall configurations.

Sonar is the workhorse perception sensor for cheap robots, but its limitations make this task a challenge. The sensor fires off a sound wave and waits for it to echo back, but it cannot detect echoes within the first millisecond. So if an object is closer than 3 inches, the sensor only detects the SECOND echo and records the object as twice as far away as it really is. Sound waves can also bounce off multiple objects before returning to the sensor, making the reading overestimate the distance.

Overcoming these false readings takes clever programming: data from all the sensors has to be integrated into a cohesive picture of the surroundings. The simplified and contrived 2D grid world makes this task a little easier.
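One way to do that integration for a single grid square is sketched below, under stated assumptions: the same 16-sonar ring with sensors 0, 4, 8 and 12 facing front, left, back and right, a hypothetical distance threshold for "wall present", and a median of the three sonars around each direction so that a single multipath overestimate or spurious short reading is outvoted. The resulting wall pattern is then compared with a known configuration under each of the four possible headings. This is an illustration, not the team's classifier.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical wall-configuration classifier for one grid square.
 * Assumes 16 sonars, sensor 0 forward, indices increasing counter-clockwise,
 * so sensors 0, 4, 8 and 12 face front, left, back and right.
 * Masks use bit 0 = front/North, 1 = left/West, 2 = back/South, 3 = right/East.
 */
final class WallConfig {
    /** Median of three values: discards a single high or low outlier. */
    static double median3(double a, double b, double c) {
        return Math.max(Math.min(a, b), Math.min(Math.max(a, b), c));
    }

    /** Robot-frame wall mask from the median of the three sonars around each direction. */
    static int wallMask(double[] ranges, double wallThreshold) {
        int n = ranges.length; // expected to be 16
        int mask = 0;
        for (int dir = 0; dir < 4; dir++) {
            int center = dir * n / 4;
            double r = median3(ranges[(center - 1 + n) % n], ranges[center], ranges[(center + 1) % n]);
            if (r < wallThreshold) {
                mask |= 1 << dir;
            }
        }
        return mask;
    }

    /** Rotates a robot-frame mask into the world frame for heading h (0=N, 1=W, 2=S, 3=E). */
    static int toWorld(int robotMask, int heading) {
        int world = 0;
        for (int j = 0; j < 4; j++) {
            if ((robotMask & (1 << j)) != 0) {
                world |= 1 << ((heading + j) % 4);
            }
        }
        return world;
    }

    /** All headings under which the observed walls match a known world-frame configuration. */
    static List<Integer> consistentHeadings(int robotMask, int worldMask) {
        List<Integer> headings = new ArrayList<>();
        for (int h = 0; h < 4; h++) {
            if (toWorld(robotMask, h) == worldMask) {
                headings.add(h);
            }
        }
        return headings;
    }
}
```

Symmetric configurations such as a straight corridor match more than one heading (both 0 and 2 for walls on the left and right), so the wall pattern alone cannot always fix the orientation.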

Movement

Getting the robot to move naturally meant implementing a robust PID (proportional-integral-derivative) control loop. Reaching a desired pose (position and orientation) from the current one takes more than an angle and a distance: the control loop must constantly adjust the wheel velocities to keep the robot on course.
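For illustration, a minimal discrete PID controller might look like the sketch below, for example turning a heading error into a turn-rate command. The gains, the fixed-timestep update and the choice of heading error as the input are assumptions; the project's actual controller structure and tuning aren't recorded here.

```java
/**
 * Minimal discrete PID controller, e.g. for turning a heading error (radians)
 * into an angular-velocity command. Gains are hypothetical and need tuning.
 */
final class Pid {
    private final double kp, ki, kd;
    private double integral = 0.0;
    private double previousError = 0.0;
    private boolean hasPrevious = false;

    Pid(double kp, double ki, double kd) {
        this.kp = kp;
        this.ki = ki;
        this.kd = kd;
    }

    /** Control output for the current error, dt seconds after the previous update. */
    double update(double error, double dt) {
        integral += error * dt;
        // Skip the derivative on the first sample to avoid a spike from an arbitrary previous error.
        double derivative = hasPrevious ? (error - previousError) / dt : 0.0;
        previousError = error;
        hasPrevious = true;
        return kp * error + ki * integral + kd * derivative;
    }

    /** Clears accumulated state, e.g. when a new motion segment starts. */
    void reset() {
        integral = 0.0;
        previousError = 0.0;
        hasPrevious = false;
    }

    /** Wraps an angle to [-pi, pi] so a heading error never asks for the long way round. */
    static double wrapAngle(double angle) {
        return Math.atan2(Math.sin(angle), Math.cos(angle));
    }
}
```

Wrapping the heading error with `wrapAngle` before calling `update` keeps the robot from turning the long way round when the error crosses ±π.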

Though there are only two variables to set, the left and right wheel speeds, it takes many layers of autonomy to keep the robot's perception of its surroundings consistent with reality.
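Those two wheel speeds relate to the robot's forward speed v and turn rate ω through standard differential-drive kinematics: the two wheels differ by ω times the wheel base. A sketch, with the wheel base left as a parameter rather than the Scout's actual dimension (the class name is hypothetical):

```java
/**
 * Differential-drive kinematics: how the two wheel speeds relate to forward
 * speed v and turn rate omega (rad/s, counter-clockwise positive).
 * wheelBase is the distance between the wheels; callers supply their own value.
 */
final class DiffDrive {
    /** Inverse kinematics: body velocity -> {left, right} wheel speeds. */
    static double[] wheelSpeeds(double v, double omega, double wheelBase) {
        double left = v - omega * wheelBase / 2;
        double right = v + omega * wheelBase / 2;
        return new double[] {left, right};
    }

    /** Forward kinematics: {left, right} wheel speeds -> {v, omega}. */
    static double[] bodyVelocity(double left, double right, double wheelBase) {
        return new double[] {(left + right) / 2, (right - left) / wheelBase};
    }
}
```

Spinning in place is the special case v = 0, where the wheels run at equal speeds in opposite directions.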

To navigate a maze, our robot would:

1. Determine the layout of its current square: whether there was a wall in front of it, behind or to the left and right

2. Decide where to move based on the goal of the lab - exploration, maze solving, mapping or simple wandering

3. Determine how close it is to the center of the square, and adjust its heading to compensate for misalignment

4. Rotate smoothly to face the correct direction

5. Confirm its orientation by calculating the angle of surrounding walls using trigonometry on its sonar data

6. Roll forward the designated distance to traverse one grid square

7. Determine the layout of its new square, adjusting its internal map if necessary

8. Measure the distance to nearby walls to determine misalignment

9. Repeat
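Steps 5 and 8 both come down to trigonometry on two sonar readings that hit the same wall. The sketch below converts each reading to a point in the robot frame and takes the line through the two points. It assumes both returns come from one flat wall and that each sensor measures along its own axis, which idealizes real sonar's fairly wide beam; the names and the ring-radius parameter are hypothetical.

```java
/**
 * Hypothetical wall geometry from two sonar readings. Sensor angles are in radians
 * relative to the robot heading (x forward, y left); ringRadius is the distance
 * from the robot's center to the sonar faces.
 */
final class WallGeometry {
    /** Converts a reading into a point in the robot frame. */
    static double[] point(double theta, double reading, double ringRadius) {
        double d = ringRadius + reading;
        return new double[] {d * Math.cos(theta), d * Math.sin(theta)};
    }

    /** Angle of the wall line relative to the robot heading, folded into [-pi/2, pi/2). */
    static double wallAngle(double theta1, double r1, double theta2, double r2, double ringRadius) {
        double[] p1 = point(theta1, r1, ringRadius);
        double[] p2 = point(theta2, r2, ringRadius);
        double angle = Math.atan2(p2[1] - p1[1], p2[0] - p1[0]);
        // A wall is an undirected line, so angles differing by pi describe the same wall.
        if (angle >= Math.PI / 2) angle -= Math.PI;
        if (angle < -Math.PI / 2) angle += Math.PI;
        return angle;
    }

    /** Perpendicular distance from the robot's center to the wall line. */
    static double wallDistance(double theta1, double r1, double theta2, double r2, double ringRadius) {
        double[] p1 = point(theta1, r1, ringRadius);
        double[] p2 = point(theta2, r2, ringRadius);
        double cross = p1[0] * p2[1] - p2[0] * p1[1];
        return Math.abs(cross) / Math.hypot(p2[0] - p1[0], p2[1] - p1[1]);
    }
}
```

For a wall that should run parallel to the robot's direction of travel, the returned angle is the heading error, and comparing `wallDistance` for the left and right walls gives the offset from the center of the square.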

Through subsequent labs these steps were condensed and smoothed out. Multiple operations could be performed simultaneously, or one could transition smoothly into the next, by predicting what the next operation required and using constrained optimization to allow more gradual velocity changes.
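The optimizer itself isn't described here, so the sketch below shows only the simplest instance of the idea, as a hypothetical stand-in: pick the next velocity command v that minimizes (v − v_target)² subject to the acceleration limit |v − v_prev| ≤ a_max·Δt. With one variable and interval bounds, the optimum is simply the target clamped to the feasible interval. Blending whole operations would involve more variables and constraints than this.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical acceleration-limited velocity update, written as a tiny constrained
 * optimization: choose v minimizing (v - target)^2 subject to |v - previous| <= maxAccel * dt.
 */
final class VelocityRamp {
    /** For one variable with interval bounds, the optimum is the target clamped to the interval. */
    static double next(double previous, double target, double maxAccel, double dt) {
        double step = maxAccel * dt;
        if (step <= 0) {
            throw new IllegalArgumentException("maxAccel * dt must be positive");
        }
        return Math.max(previous - step, Math.min(previous + step, target));
    }

    /** Sequence of commanded velocities from start until the target is reached. */
    static List<Double> profile(double start, double target, double maxAccel, double dt) {
        List<Double> commands = new ArrayList<>();
        double v = start;
        while (v != target) {
            v = next(v, target, maxAccel, dt); // lands exactly on target once within one step
            commands.add(v);
        }
        return commands;
    }
}
```

With a 2 in/s² limit and 0.5 s ticks, for example, ramping from rest to 3 in/s yields commands of 1, 2 and 3 in/s.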

Course corrections could be made without stopping by fitting a curve between desired and current poses (positions and orientations). This gave the appearance of smoother, more natural motion.
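The curve family isn't named, so the sketch below uses one common choice as an assumption: a cubic Hermite curve, which starts at the current pose pointing along its heading and ends at the desired pose pointing along that heading. The class name and the `tangentScale` parameter are hypothetical.

```java
/**
 * Hypothetical cubic Hermite curve between two poses (x, y, heading in radians).
 * The curve leaves the first pose along its heading and arrives at the second
 * pose along its heading.
 */
final class PoseCurve {
    private final double x0, y0, tx0, ty0, x1, y1, tx1, ty1;

    /** tangentScale sets how strongly the curve follows each heading near its ends. */
    PoseCurve(double x0, double y0, double heading0,
              double x1, double y1, double heading1, double tangentScale) {
        this.x0 = x0;
        this.y0 = y0;
        this.x1 = x1;
        this.y1 = y1;
        this.tx0 = tangentScale * Math.cos(heading0);
        this.ty0 = tangentScale * Math.sin(heading0);
        this.tx1 = tangentScale * Math.cos(heading1);
        this.ty1 = tangentScale * Math.sin(heading1);
    }

    /** Position at parameter t in [0, 1], using the Hermite basis functions. */
    double[] point(double t) {
        double t2 = t * t, t3 = t2 * t;
        double h00 = 2 * t3 - 3 * t2 + 1;
        double h10 = t3 - 2 * t2 + t;
        double h01 = -2 * t3 + 3 * t2;
        double h11 = t3 - t2;
        return new double[] {
            h00 * x0 + h10 * tx0 + h01 * x1 + h11 * tx1,
            h00 * y0 + h10 * ty0 + h01 * y1 + h11 * ty1
        };
    }

    /** Direction of travel at parameter t, from the derivatives of the basis functions. */
    double heading(double t) {
        double t2 = t * t;
        double d00 = 6 * t2 - 6 * t;
        double d10 = 3 * t2 - 4 * t + 1;
        double d01 = -6 * t2 + 6 * t;
        double d11 = 3 * t2 - 2 * t;
        double dx = d00 * x0 + d10 * tx0 + d01 * x1 + d11 * tx1;
        double dy = d00 * y0 + d10 * ty0 + d01 * y1 + d11 * ty1;
        return Math.atan2(dy, dx);
    }
}
```

Following the curve means steering so the robot's heading tracks `heading(t)` as t advances from 0 to 1; a larger `tangentScale` makes the robot hold each end's heading longer before bending toward the other.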

The more complicated the code got, the more testing it needed to work out the bugs. Once, the robot was calmly navigating the maze when it suddenly started spinning in place, rapidly and out of control! We hit the emergency stop and traced the cause to a singularity in the kinematic path-planning code, where dividing by a trigonometric function near zero produced near-infinite wheel velocities. Luckily they were in opposite directions!
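The offending formula isn't recorded here, so the sketch below only illustrates two common guards against this class of bug, with hypothetical names. First, steer by curvature rather than turning radius: for the circular arc that leaves the robot along its heading and passes through a target point (x, y) in the robot frame, κ = 2y / (x² + y²). That equals 2 sin α / L (α the bearing to the target, L its distance) but never divides by a trigonometric function, and a target dead ahead simply gives κ = 0 instead of an infinite radius. Second, saturate the wheel speeds by a common factor, so even a huge turn rate is capped while the ratio between the wheels, and hence the path's curvature, is preserved.

```java
/** Hypothetical guarded arc steering with wheel-speed saturation. */
final class SafeArc {
    /**
     * Curvature of the arc from the robot (origin, heading along +x) through target (x, y):
     * kappa = 2y / (x^2 + y^2), equivalent to 2 sin(alpha) / L without dividing by a trig function.
     */
    static double curvature(double x, double y, double minDistance) {
        double d2 = x * x + y * y;
        if (d2 < minDistance * minDistance) {
            return 0.0; // target (nearly) reached: don't steer toward a point under the robot
        }
        return 2.0 * y / d2;
    }

    /** {left, right} wheel speeds for speed v along curvature kappa, scaled together if either exceeds the limit. */
    static double[] wheelSpeeds(double v, double kappa, double wheelBase, double maxWheelSpeed) {
        double omega = v * kappa;
        double left = v - omega * wheelBase / 2;
        double right = v + omega * wheelBase / 2;
        double peak = Math.max(Math.abs(left), Math.abs(right));
        if (peak > maxWheelSpeed) {
            double scale = maxWheelSpeed / peak; // same factor on both wheels keeps the curvature
            left *= scale;
            right *= scale;
        }
        return new double[] {left, right};
    }
}
```

The remaining singular case, a target at the robot's own position, is caught by the `minDistance` check.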

Maze Solving

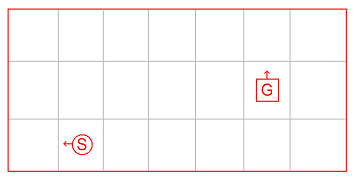

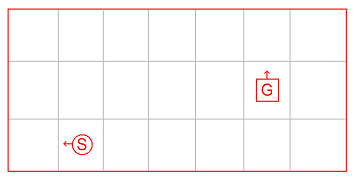

Beyond simple navigation, by the end of the course the robot was required to map and solve mazes while traversing them. At the start it had only a start position and a goal position, with no knowledge of the maze's structure.

This was done mostly using decision trees, whittling a vast number of possible maze configurations down to one as new information was gathered in the course of navigation.
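The decision trees themselves aren't reproduced here; the sketch below shows only the underlying elimination idea, under simplifying assumptions: the candidate mazes are listed explicitly (a real maze has far too many configurations to enumerate naively), and wall observations are exact. The names and the wall-bit layout are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical candidate elimination: each hypothesis is one possible maze, and every
 * observation of a cell's walls discards the hypotheses that disagree with it.
 * Wall masks use bit 0 = North, 1 = West, 2 = South, 3 = East.
 */
final class MazeHypotheses {
    /** One candidate maze: a wall mask for every cell, indexed [row][col]. */
    static final class Maze {
        final String name;
        final int[][] walls;

        Maze(String name, int[][] walls) {
            this.name = name;
            this.walls = walls;
        }
    }

    /** Keeps only the candidates whose walls at (row, col) match the observed mask. */
    static List<Maze> filter(List<Maze> candidates, int row, int col, int observedMask) {
        List<Maze> kept = new ArrayList<>();
        for (Maze m : candidates) {
            if (m.walls[row][col] == observedMask) {
                kept.add(m);
            }
        }
        return kept;
    }

    /** True if the remaining candidates disagree about (row, col), so observing it would be informative. */
    static boolean isInformative(List<Maze> candidates, int row, int col) {
        for (Maze m : candidates) {
            if (m.walls[row][col] != candidates.get(0).walls[row][col]) {
                return true;
            }
        }
        return false;
    }
}
```

Cells where `isInformative` is true are exactly the ones worth visiting next: once the candidates agree everywhere, the maze is known.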

The robot would seek out areas with incomplete information until it could find a path to its goal state.
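The exact exploration strategy isn't described, so as one plausible illustration, the sketch below treats "seek out areas with incomplete information" as a breadth-first search to the nearest unobserved cell, moving only through cells whose walls are already known. BFS, the grid representation and all names are assumptions.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.List;

/**
 * Hypothetical frontier search on a grid maze. walls[r][c] is a wall mask
 * (bit 0 = North, 1 = West, 2 = South, 3 = East), trusted only where known[r][c] is true.
 */
final class Frontier {
    // Row/column offsets in the same order as the wall bits: N, W, S, E.
    private static final int[] DR = {-1, 0, 1, 0};
    private static final int[] DC = {0, -1, 0, 1};

    /**
     * Breadth-first search from the start cell to the nearest cell not yet observed.
     * Returns the path as {row, col} pairs from start to that cell, or an empty list
     * if every reachable cell is already known.
     */
    static List<int[]> pathToNearestUnknown(boolean[][] known, int[][] walls, int startRow, int startCol) {
        int rows = known.length, cols = known[0].length;
        int[] parent = new int[rows * cols];
        Arrays.fill(parent, -2);          // -2 = not visited yet
        int start = startRow * cols + startCol;
        parent[start] = -1;               // -1 marks the start of the path
        Deque<Integer> queue = new ArrayDeque<>();
        queue.add(start);
        while (!queue.isEmpty()) {
            int cell = queue.poll();
            int r = cell / cols, c = cell % cols;
            if (!known[r][c]) {
                // Walk the parent links back to the start, then reverse into start-to-goal order.
                List<int[]> path = new ArrayList<>();
                for (int i = cell; i != -1; i = parent[i]) {
                    path.add(new int[] {i / cols, i % cols});
                }
                Collections.reverse(path);
                return path;
            }
            for (int d = 0; d < 4; d++) {
                if ((walls[r][c] & (1 << d)) != 0) continue; // a wall blocks this direction
                int nr = r + DR[d], nc = c + DC[d];
                if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
                int next = nr * cols + nc;
                if (parent[next] == -2) {
                    parent[next] = cell;
                    queue.add(next);
                }
            }
        }
        return Collections.emptyList();
    }
}
```

Because BFS expands cells in order of distance, the first unobserved cell it reaches is the closest one in grid moves; an empty result means exploration is finished for the reachable part of the maze.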

Admittedly, this was mostly handled by my computer science teammates - I spent most of my time optimizing and improving the movement code.

Nevertheless, the whole experience was a fantastic introduction to mobile robotics, and the challenges of navigating even the simplest and most contrived environments! It gave me a new appreciation for field robotics and a hunger to learn more.